Today, many developers find themselves working on multiple projects at the same time, often dealing with unfamiliar codebases and programming languages, and being pulled in many directions. This is backed up by the 2023 "State of Engineering Management Report" by Jellyfish which found that:

60% of teams reported being short of engineering resources needed to accomplish their established goals

This is an area where generative AI promises to add real value. The latest Gartner research backs this up, with the planning assumption that, by 2028:

75% of enterprise software engineers will use AI coding assistants, up from less than 10% in early 2023

With this in mind, enterprises need to prepare now for the inevitable wide-scale adoption of these tools, and start taking advantage of the benefits they can offer. In this post, we look at some of the core features of the GenAI services available today on AWS that help to provide the next generation developer experience.

Source: https://dev.to/aws-heroes/using...

Responsible AI

Although not directly part of developer experience, the first area to address is Responsible AI. This is because the rise of generative AI has led to concerns around the ethics and legality of the content generated. The risk is heightened by ongoing legal actions, including the class action lawsuit against GitHub, OpenAI and Microsoft alleging violations of open-source licences and copyright law.

The Professional tier of Amazon CodeWhisperer is covered by the "Indemnified Generative AI Services" from AWS. This means, quoting from the AWS Service Terms, that:

AWS will defend you and your employees, officers, and directors against any third-party claim alleging that the Generative AI Output generated by an Indemnified Generative AI Service infringes or misappropriates that third party’s intellectual property rights, and will pay the amount of any adverse final judgment or settlement

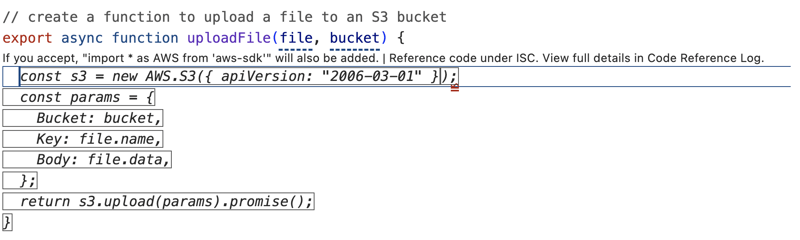

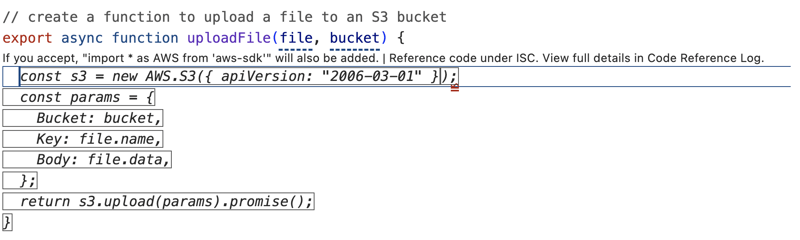

Amazon CodeWhisperer provides a reference tracker that displays the licensing information for a code recommendation. This allows a developer to understand what source code attribution they need to make, and whether they should accept the recommendation.

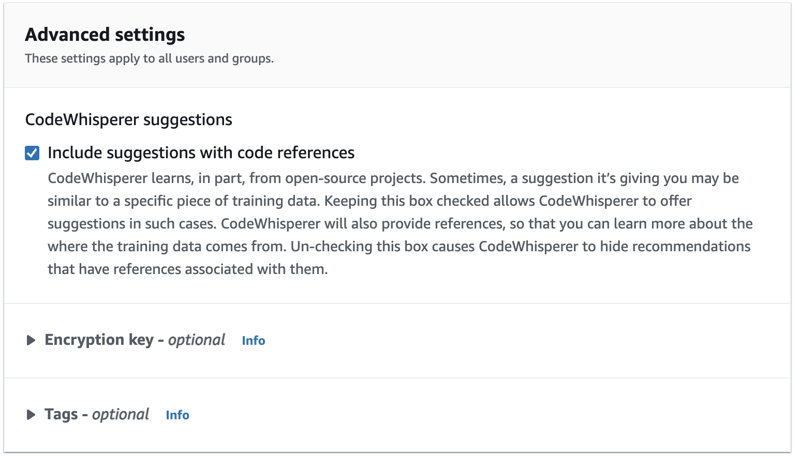

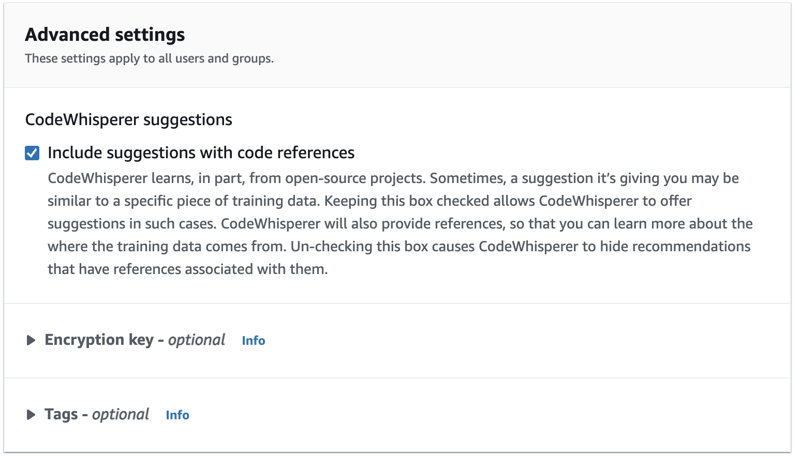

However, to ensure that you are indemnified, you must remain opted in (the default) to including suggestions with code references. This setting is managed at the AWS Organization level within the CodeWhisperer service console.

AWS also address the toxicity and fairness of the generated code by evaluating it in real time, and filtering out any recommendations that include toxic phrases or that indicate bias.

Code Creation

One of the primary goals of AI coding assistants is to increase the productivity of developers in creating code. In this section, we break this capability down into different categories, separating programming languages from Infrastructure-as-Code tools, SQL, and shell commands on the command line.

Code Completion

With code completion, CodeWhisperer makes suggestions inline as code is written in the IDE. This has been around for some time, and is known by the term IntelliSense in Visual Studio Code.

The limitation of code completion is that the developer has to start writing code to trigger it, so the developer is still driving the implementation detail.

Code Generation

With code generation, a developer writes a comment in natural language giving specific and concise requirements. This information, alongside the surrounding code including other open files in the editor, acts as the input context. CodeWhisperer returns a suggestion based on this context.

Amazon CodeWhisperer is trained on billions of lines of Amazon internal and open source code. This gives CodeWhisperer an advantage when it comes to making suggestions for using AWS native services. In the example below, CodeWhisperer understands from the input context that we want to create a handler for an AWS Lambda function, and suggests a correct signature and function implementation.

There are techniques to help you generate the best recommendation, and you can find out more details in this blog post on Best practices for prompt engineering with Amazon CodeWhisperer.
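To make the comment-driven flow concrete, here is a rough sketch of a natural language comment paired with the kind of Lambda handler an assistant might propose. This is a hand-written illustration, not actual CodeWhisperer output; the `name` field and the greeting message are assumptions made for the example.

```python
import json


# Create an AWS Lambda handler that reads "name" from the event
# and returns a JSON greeting with a 200 status code.
def lambda_handler(event, context):
    # Fall back to a default when the (hypothetical) "name" field is absent.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

The comment states the input, the output and the status code, which is the kind of specific and concise requirement that tends to produce a usable suggestion.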

Customizations

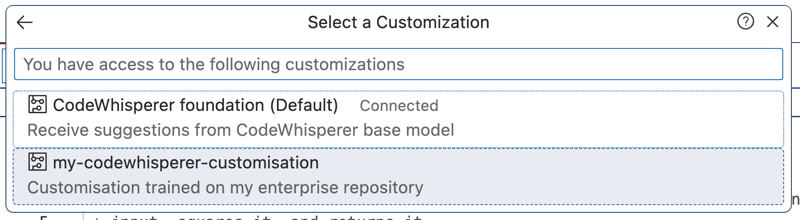

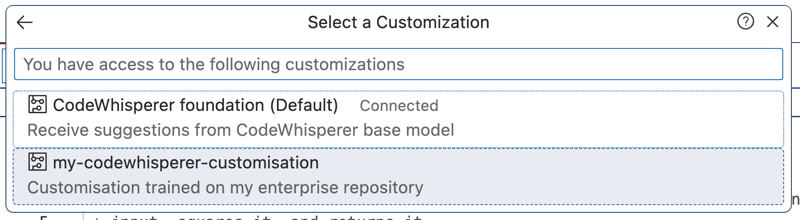

The source code that Amazon CodeWhisperer is trained on is great for most scenarios, but does not help when an organization has its own internal set of libraries, best practices and coding standards that must be followed. This is where the customization capability comes in. With this capability, you create a connection to your code repositories (either third-party hosted or via an S3 bucket), and then train a customization from this codebase. This capability is in preview and available only in the Professional tier.

When you create a customization and assign it to a user, they can select that customization in the editor, and it is then used to generate code suggestions.

CodeWhisperer Customizations were designed from the ground up with security in mind. This blog post gives more detail in this space.

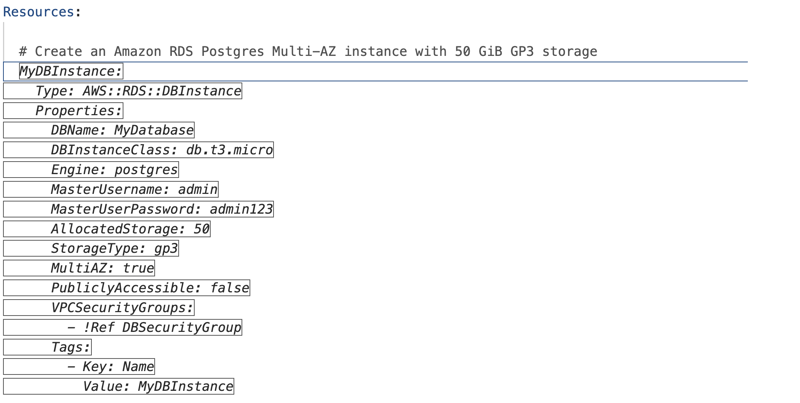

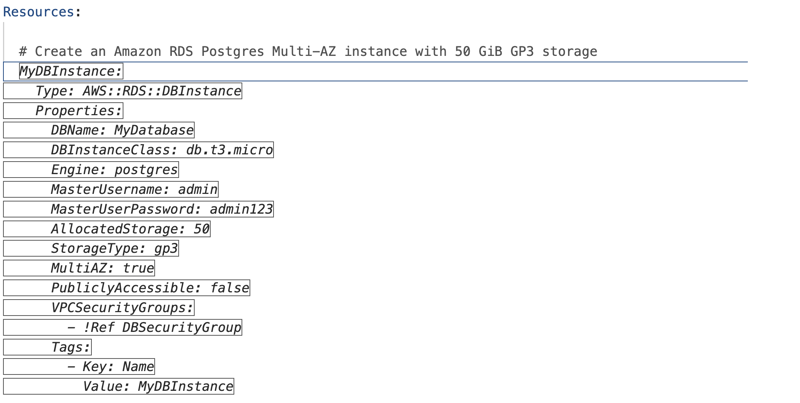

Infrastructure as Code Support

CodeWhisperer support for creating code extends beyond just programming languages and into Infrastructure as Code (IaC) tools such as CloudFormation, AWS CDK and Terraform. The screenshot below shows the specification of an RDS instance created as a resource in CloudFormation from a natural language prompt.

The example below uses a simple prompt to configure Terraform Cloud, and then create an EC2 instance using the AMI for Amazon Linux 2.

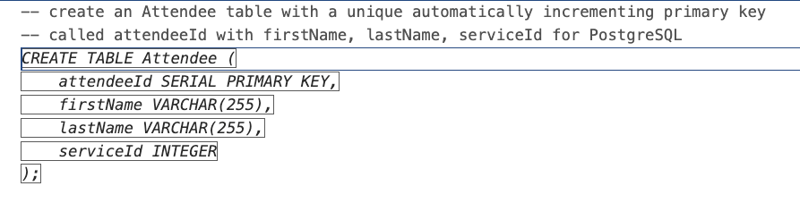

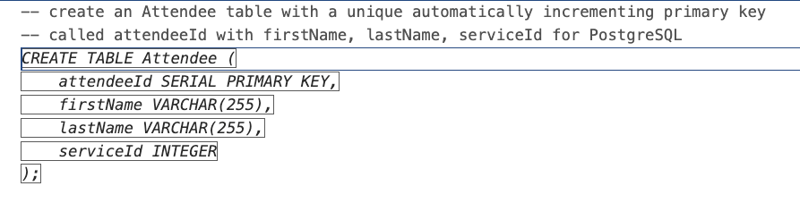
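For readers who cannot see the screenshots, the sketch below shows roughly what a minimal CloudFormation definition of an RDS instance looks like, built here as a Python dictionary so it can be printed as JSON. It is a hand-written illustration rather than CodeWhisperer output; the logical ID `AppDatabase`, the `DBPassword` parameter, the username and the sizing values are all assumptions.

```python
import json


def build_rds_template(db_name="appdb", instance_class="db.t3.micro"):
    """Return a minimal CloudFormation template (as a dict) for one RDS instance."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            # NoEcho keeps the password out of console and API output.
            "DBPassword": {"Type": "String", "NoEcho": True, "MinLength": 8},
        },
        "Resources": {
            # "AppDatabase" is a hypothetical logical ID chosen for this example.
            "AppDatabase": {
                "Type": "AWS::RDS::DBInstance",
                "Properties": {
                    "DBName": db_name,
                    "Engine": "mysql",
                    "DBInstanceClass": instance_class,
                    "AllocatedStorage": "20",
                    "MasterUsername": "dbadmin",
                    "MasterUserPassword": {"Ref": "DBPassword"},
                },
            }
        },
    }
```

Calling `print(json.dumps(build_rds_template(), indent=2))` emits the template as JSON, which should be reviewed before being deployed anywhere.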

SQL Support

CodeWhisperer also supports the creation of SQL as a standard language for database creation and manipulation. This covers Data Definition Language commands (such as creating tables and views) as well as Data Manipulation Language commands (from simple inserts through to complex queries with joins across tables).
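As a small illustration of the two categories, the sketch below runs DDL and DML statements against an in-memory SQLite database using Python's standard library. The `customers` and `orders` tables and their data are invented for the example, and the SQL is hand-written rather than generated by CodeWhisperer.

```python
import sqlite3


def customer_totals():
    """Create two tables, insert rows, and return total order value per customer."""
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()

    # Data Definition Language: create the tables.
    cur.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    cur.execute(
        "CREATE TABLE orders ("
        "id INTEGER PRIMARY KEY, "
        "customer_id INTEGER NOT NULL REFERENCES customers(id), "
        "amount REAL NOT NULL)"
    )

    # Data Manipulation Language: simple inserts.
    cur.executemany(
        "INSERT INTO customers (id, name) VALUES (?, ?)",
        [(1, "Ada"), (2, "Linus")],
    )
    cur.executemany(
        "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
        [(1, 20.0), (1, 5.5), (2, 12.0)],
    )

    # Data Manipulation Language: a query with a join and an aggregate.
    rows = cur.execute(
        "SELECT c.name, SUM(o.amount) AS total "
        "FROM customers c JOIN orders o ON o.customer_id = c.id "
        "GROUP BY c.name ORDER BY total DESC"
    ).fetchall()
    conn.close()
    return rows
```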

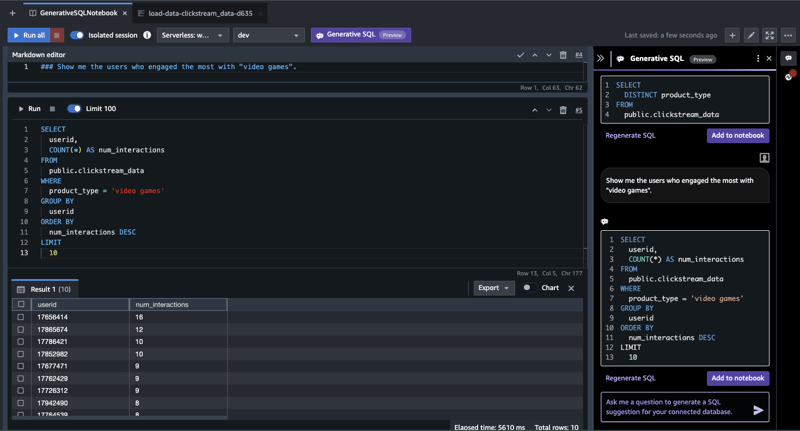

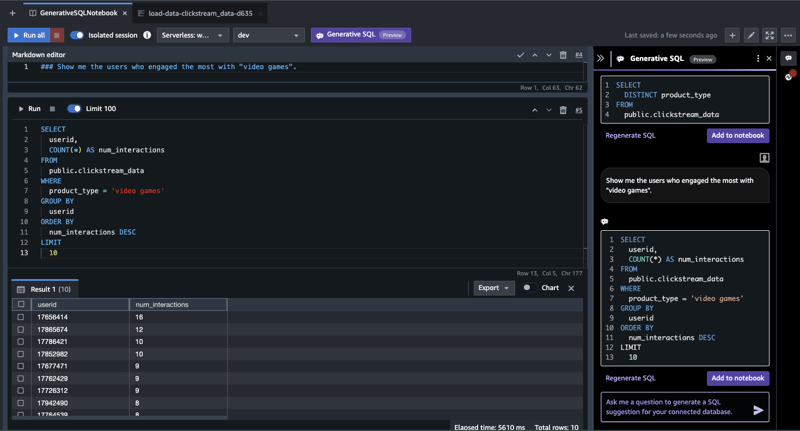

Generative AI can also help the developer by converting natural language to SQL across many AWS services. In the screenshot below, we are using Generative AI to generate queries from natural language against Amazon Redshift. These queries can be added directly to a notebook and then executed, all within the Redshift Query Editor V2.

It doesn't stop there - you can also use natural language to query Amazon CloudWatch Log Groups and Metrics, and AWS Config Advanced Queries.

CodeWhisperer on the Command Line

CodeWhisperer is also available on the command line. This allows you to write a natural language instruction which is converted to an executable shell snippet. It supports hundreds of popular CLIs. This reduces the overhead on the developer of having to remember these commands, or the context switching of navigating away from the IDE to look them up. You can also execute the commands directly, as we see below.

Application Understanding

One of the most valuable use cases for GenAI is helping to understand existing applications. A common problem is supporting an application with limited, if any, documentation, written in an unfamiliar programming language or style, and with no comments in the code.

Explaining Code

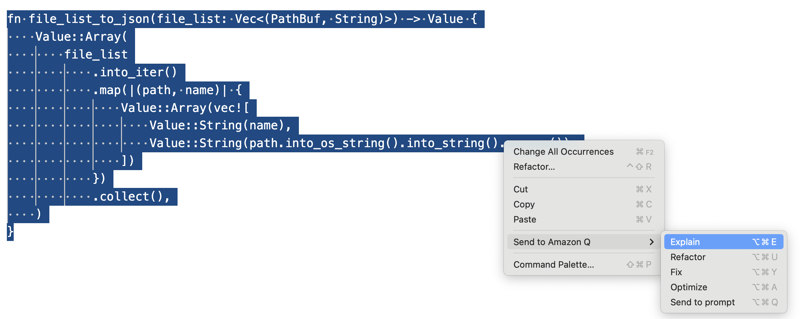

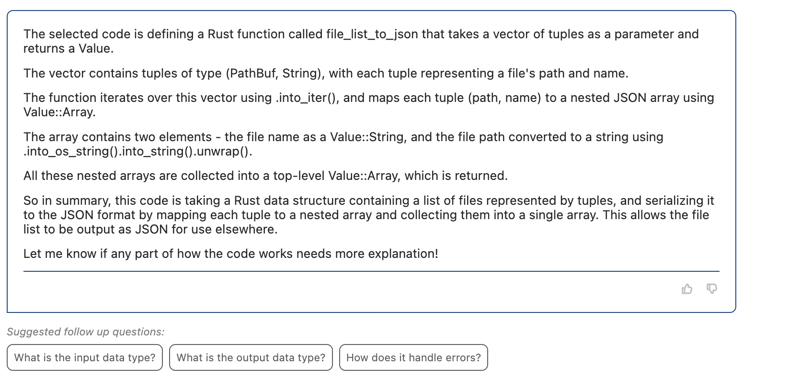

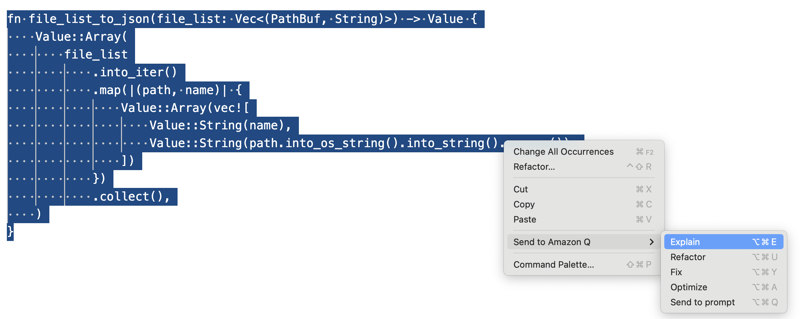

Working in combination with CodeWhisperer in your IDE, you can send whole code sections to Amazon Q and ask for an explanation of what the selected code does. To show how this works, we open up the file.rs file cloned from this GitHub repository. This is part of an open source project to host documentation of crates for the Rust Programming Language, which is a language we are not familiar with.

We select a code block from the file, right-click, and then send to Amazon Q to explain:

Amazon Q provides a detailed breakdown of the function that has been written in Rust, and the key activities that it carries out. What is really useful in this case is that Amazon Q suggests follow-up questions to help you get an even better understanding of the code. This allows you to chat with and ask questions about the code segment:

Application Visualisation

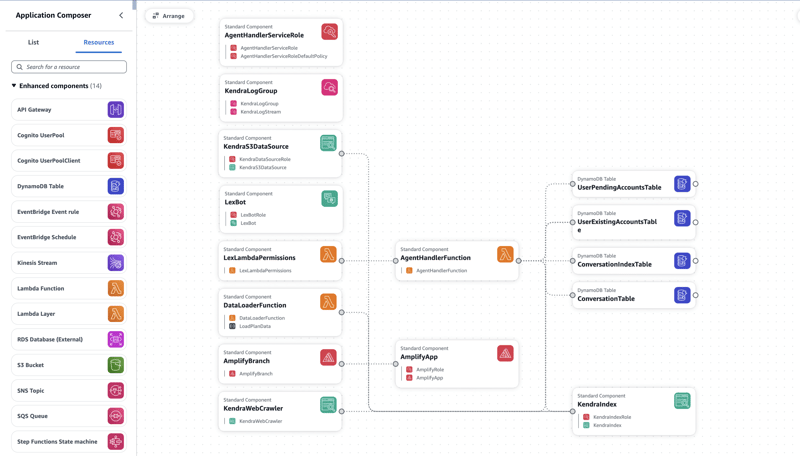

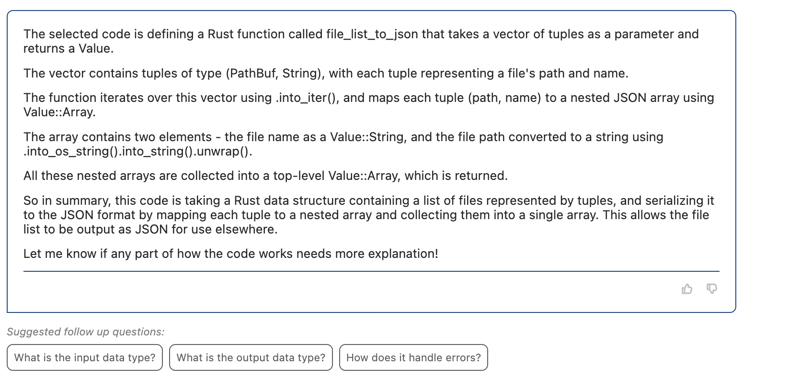

A new feature that is incredibly useful is to visualise how an application is composed using Application Composer directly within the IDE. At the end of last year, Application Composer announced support for all 1000+ resources supported by CloudFormation.

This now works for any application running in AWS, even if not originally deployed through CloudFormation, with the introduction of the AWS CloudFormation IaC Generator (for more information see Infrastructure as Code Generator). This allows you to generate a CloudFormation template. Within VSCode, you can select the template, right-click, and select "Open with Application Composer".

The screenshot below uses the CloudFormation template from an AWS samples application in GitHub which can be found here.

Application Modernisation

A massive problem for organisations is the amount of technical debt growing in legacy applications. The challenge here is how to modernise these applications. The starting point is to make sure you understand the application in question using the approaches above. In addition, a number of other capabilities are available.

Chat with Amazon Q

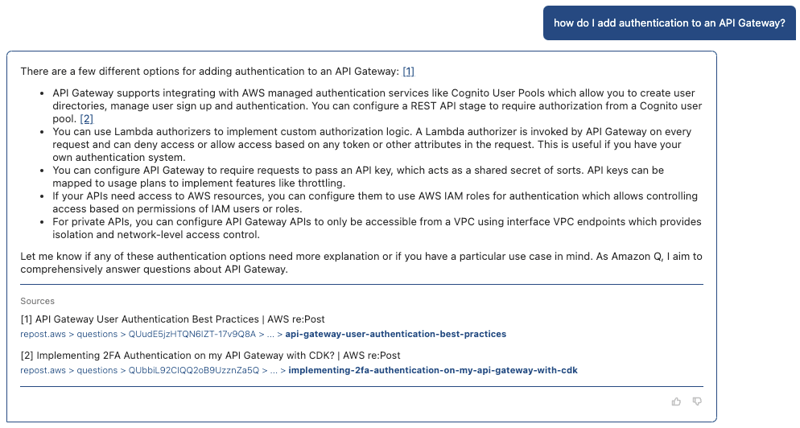

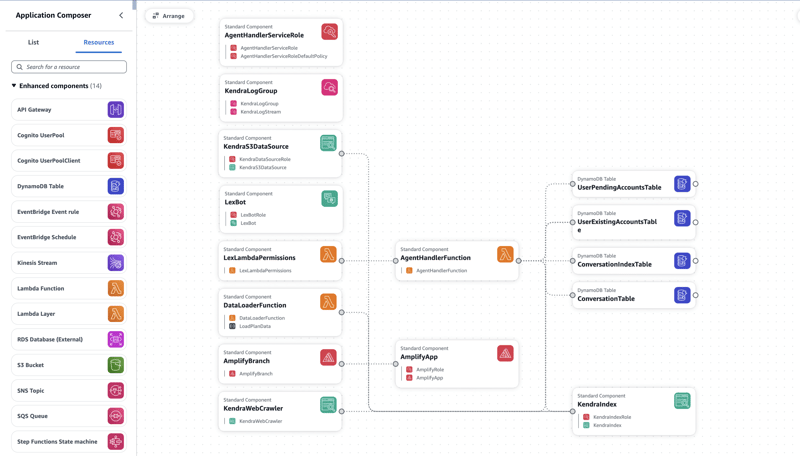

Amazon Q is available in both the console and the IDE to answer questions around AWS. This allows you to ask questions about AWS services, limits and best practices alongside software development.

The generated content contains links to the source articles, which allow you to do more in-depth reading to validate the response. Again, when writing code in the editor, this allows the developer to remain in the IDE to ask these questions, reducing distraction and context switching.

Code Transformation

Code Transformation is a formal feature of Amazon Q. It is currently available in preview with support to carry out complete application upgrades from Java 8 or Java 11 to Java 17. Coming soon is support to perform .NET Framework to cross-platform .NET upgrades to migrate applications from Windows to Linux faster. The video below shows the steps involved to automatically update a Java application.

The internal Amazon results are impressive, with 1000 production applications upgraded from Java 8 to Java 17 in just two days, with an average of 10 minutes to upgrade each application.

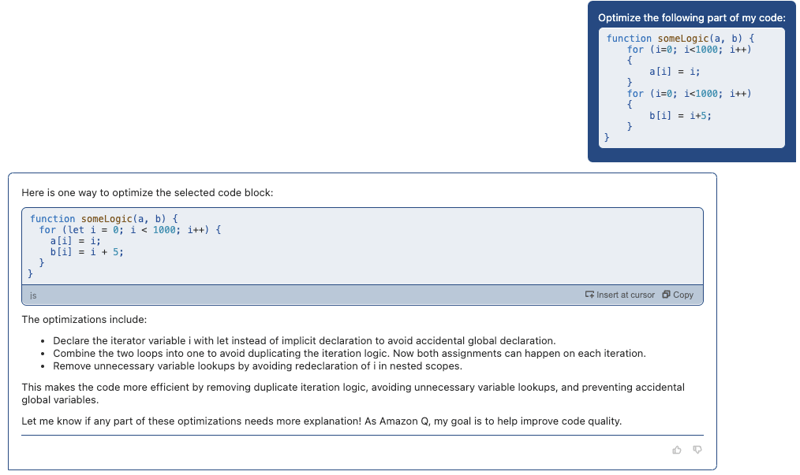

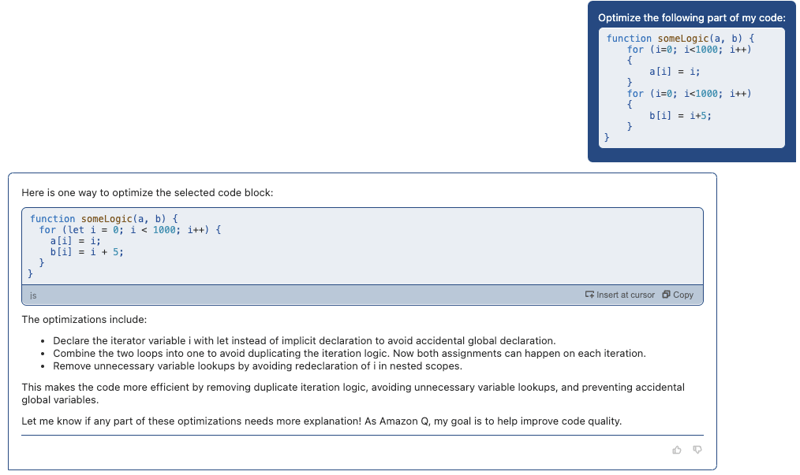

Code Optimisation

Code optimisation is a concept supported by Amazon Q through its built-in prompts. In the example below, we have inefficient code with two loops that could be combined into a single loop. This is correctly detected with suggestions made to optimise the code.

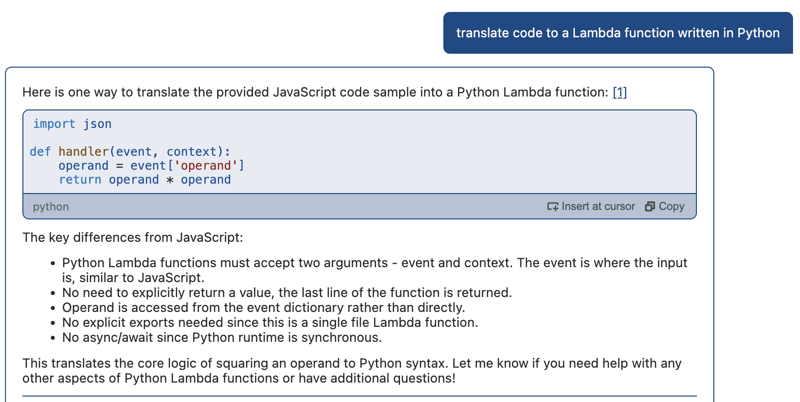

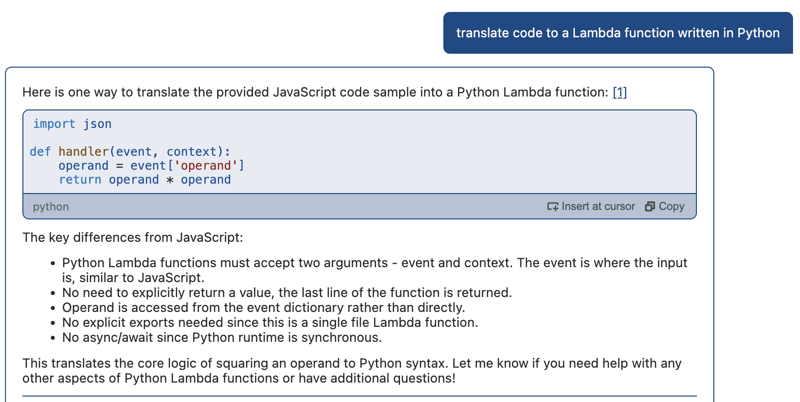
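The kind of change described here can be sketched as follows. This is a hypothetical before-and-after written for illustration, not the code from the screenshots and not Amazon Q output; both function names are invented.

```python
def summarise_two_loops(values):
    """Original shape: the list is traversed twice."""
    total = 0
    for v in values:
        total += v
    largest = values[0]
    for v in values:
        if v > largest:
            largest = v
    return total, largest


def summarise_one_loop(values):
    """Optimised shape: the sum and the maximum are computed in a single pass."""
    total = 0
    largest = values[0]
    for v in values:
        total += v
        if v > largest:
            largest = v
    return total, largest
```

Both functions return the same result for a non-empty list; the second simply avoids the second traversal.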

Code Translation

Code translation is another concept supported by Amazon Q through prompts. As always, the accuracy and quality of the code generation is dependent upon the size and quality of the training data. In this context, there is more support for languages such as Java, Python and JavaScript than C++ or Scala. In the screenshot below, we have taken an AWS Lambda function written in JavaScript and asked Amazon Q to translate to Python.

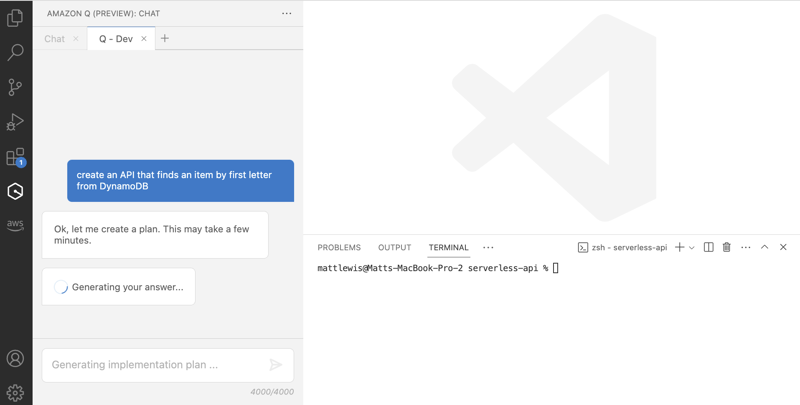

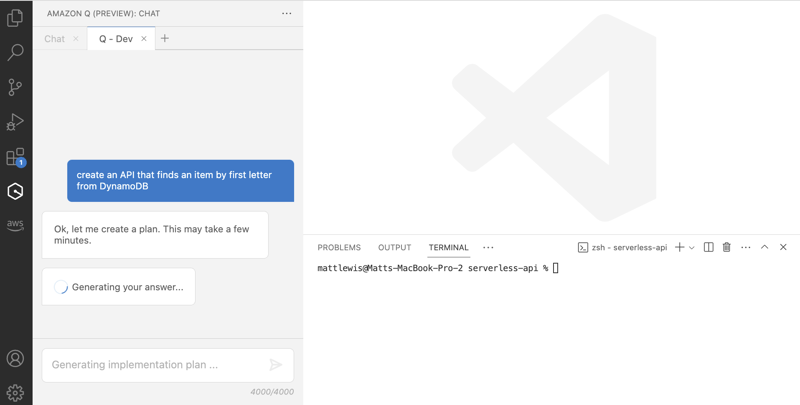
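To give a feel for what such a translation involves, the sketch below shows a small JavaScript handler (kept as a comment) next to a hand-translated Python equivalent. Neither is taken from the screenshots or produced by Amazon Q; the `items`, `price` and `qty` fields are assumptions made for the example.

```python
import json

# Hypothetical JavaScript original, shown for comparison only:
#
#   exports.handler = async (event) => {
#     const items = JSON.parse(event.body).items;
#     const total = items.reduce((sum, i) => sum + i.price * i.qty, 0);
#     return { statusCode: 200, body: JSON.stringify({ total }) };
#   };


def handler(event, context):
    """Python equivalent: parse the JSON body and total price * quantity."""
    items = json.loads(event["body"])["items"]
    total = sum(item["price"] * item["qty"] for item in items)
    return {"statusCode": 200, "body": json.dumps({"total": total})}
```

Even in a case this small, idioms change (`reduce` becomes `sum` over a generator), which is why translated code still deserves a review and a test run.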

Feature Development

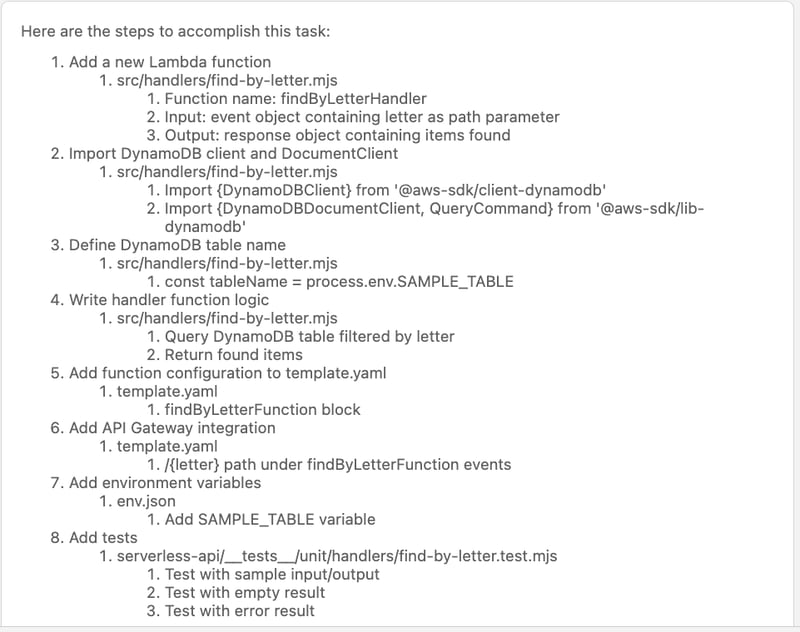

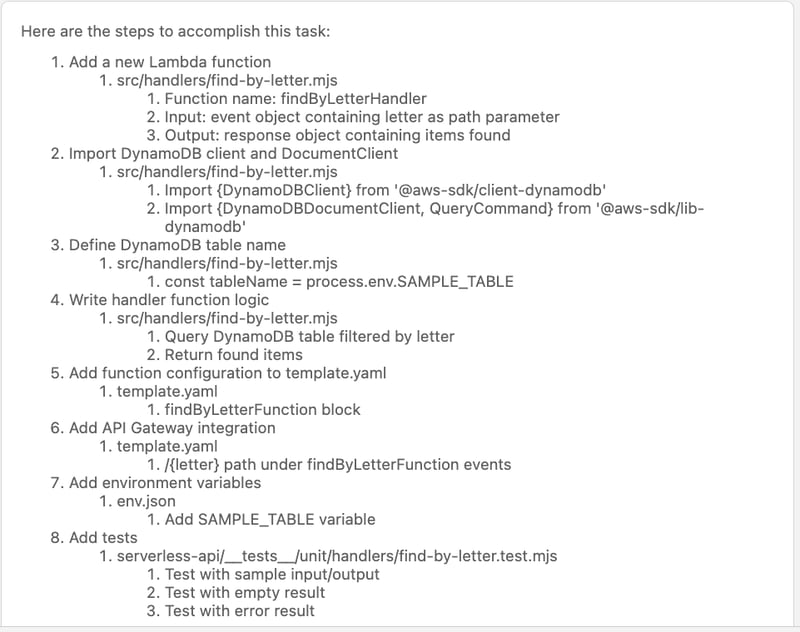

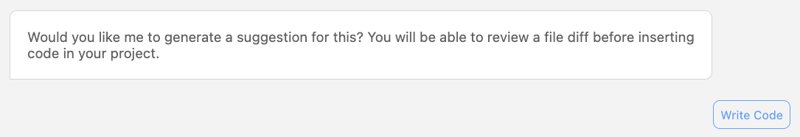

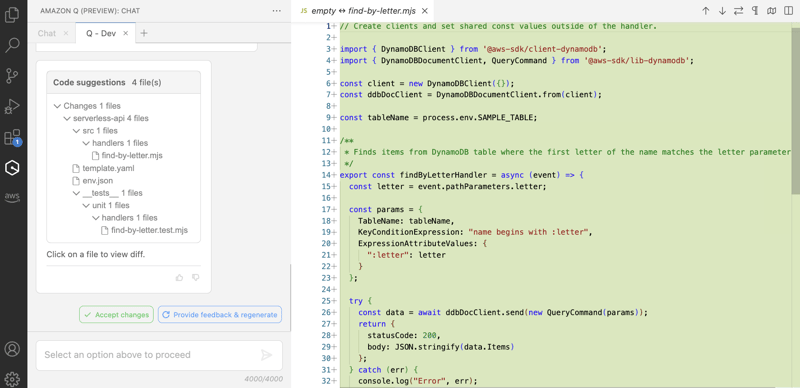

Feature Development is a formal feature of Amazon Q. You explain the feature you want to develop, and then allow Amazon Q to create everything from the implementation plan to the suggested code. For this example, we create an application using an AWS SAM quick start template for a serverless API. We then ask Q to create a new API for us.

Amazon Q uses the context of the current project to generate a detailed implementation plan as shown below.

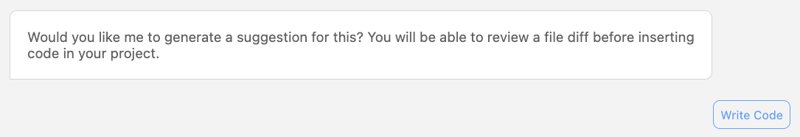

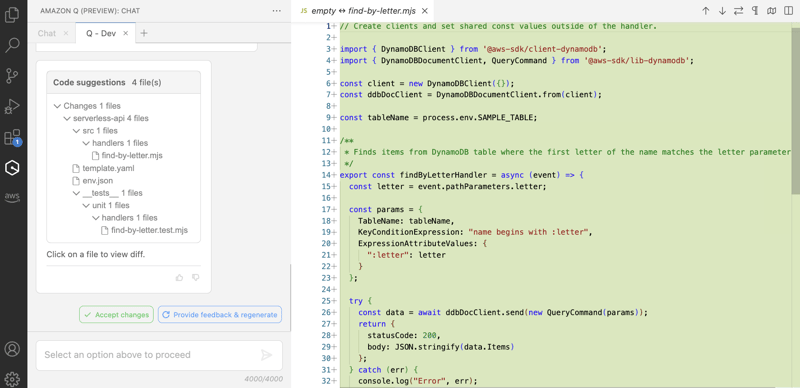

By selecting 'Write Code', Amazon Q then generates the code suggestions, using the coding style as already set out in the current project.

This results in the proposed code suggestions, whereby you can choose to click on each file to view the differences, and finally choose whether or not to accept the changes.

This promises to be a hugely powerful feature. In the screenshots above, we have shown how Amazon Q has taken a natural language instruction, and created everything from the Infrastructure-as-Code to the function implementation and unit tests.

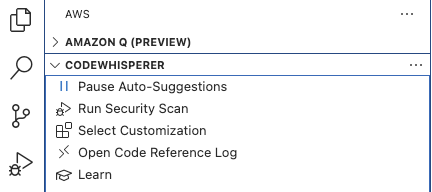

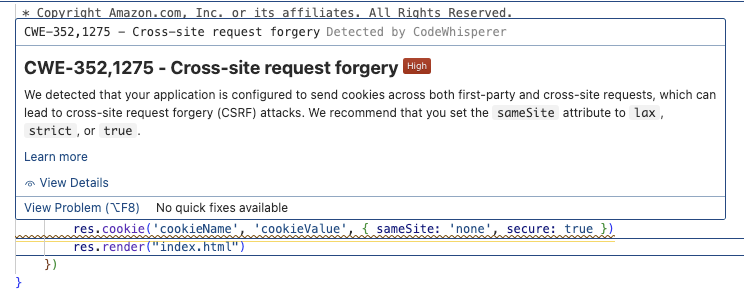

Code Vulnerabilities

It is critical to prevent vulnerabilities being present in your application, and the earlier these are detected and resolved in the development life cycle the better. CodeWhisperer can detect security policy violations and vulnerabilities in code using static application security testing (SAST), secrets detection, and Infrastructure as Code (IaC) scanning.

Within CodeWhisperer, you can select to run a security scan.

This performs the security scan on the currently active file in the IDE editor, and its dependent files from the project.

Security scans in CodeWhisperer identify security vulnerabilities and suggest how to improve your code. In some cases, CodeWhisperer provides code you can use to address those vulnerabilities. The security scan is powered by detectors from the Amazon CodeGuru Detector Library.

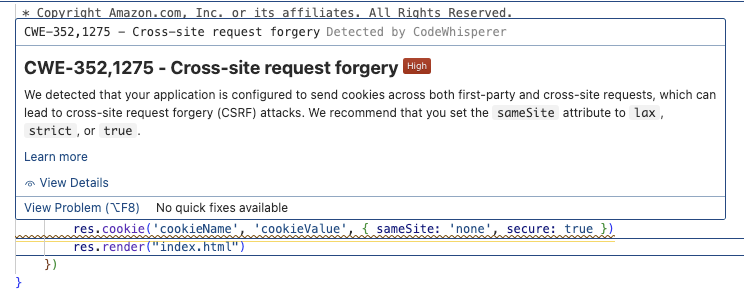

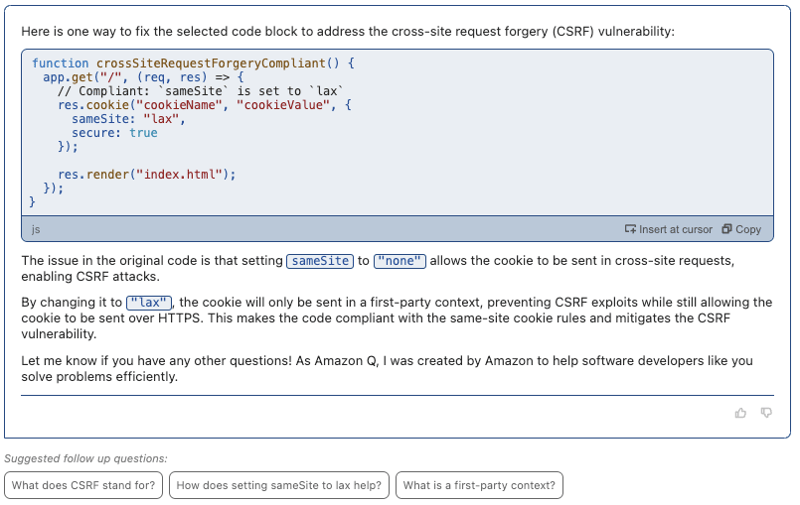

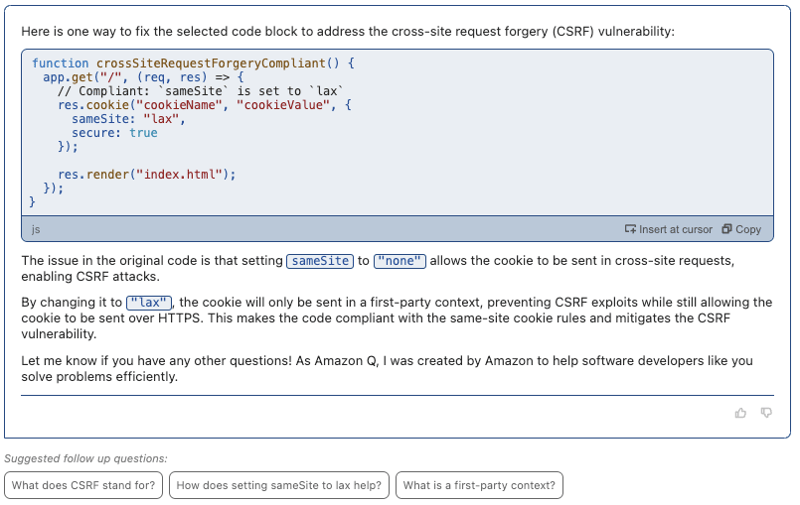

In the screenshot below, we use sample code that is vulnerable to Cross-Site Request Forgery (CSRF), and this is picked up by the scan.

Having detected that there is an issue, we select the function with the vulnerability, and send it to Amazon Q to fix. Amazon Q generates code that we can copy or insert directly into the editor, as well as providing details about the issue and resolution, and suggesting follow-up questions if we want to learn even more.
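For context on what a CSRF fix typically involves, the sketch below shows one common mitigation, a per-session synchroniser token, using only the Python standard library. It is a generic illustration and not the fix generated by Amazon Q in the screenshots; the `session` dictionary and both function names are assumptions standing in for whatever the web framework provides.

```python
import hmac
import secrets


def issue_csrf_token(session):
    """Generate a random token, store it in the session, and return it
    so it can be embedded in the form sent to the browser."""
    token = secrets.token_urlsafe(32)
    session["csrf_token"] = token
    return token


def is_valid_csrf(session, submitted_token):
    """Accept a state-changing request only if the submitted token
    matches the one stored in the session."""
    expected = session.get("csrf_token")
    if not expected or not submitted_token:
        return False
    # compare_digest runs in constant time, avoiding timing side channels.
    return hmac.compare_digest(expected.encode(), submitted_token.encode())
```

A forged request from another site cannot read the token from the victim's session, so it fails the check. In practice, most web frameworks ship this protection and it is usually better to enable theirs than to write your own.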

Debugging and Troubleshooting

Moving outside of the IDE and into the AWS console, there are now places where generative AI capabilities can be used to help debug problems.

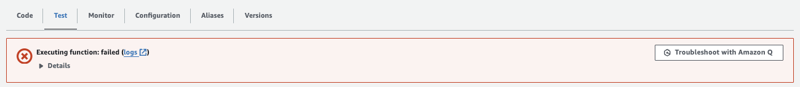

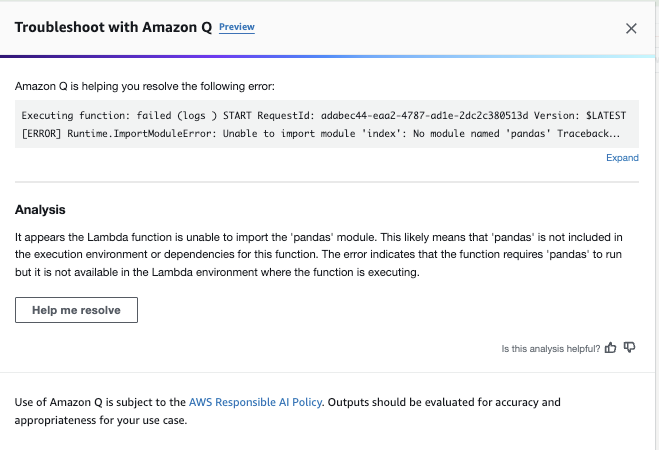

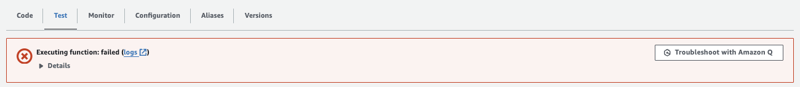

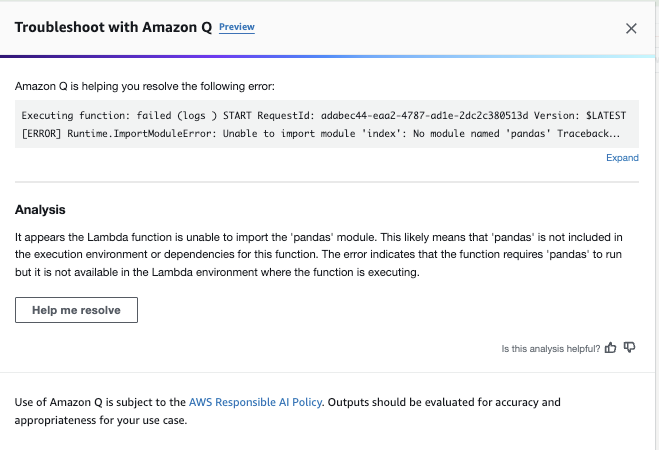

In the example below, we have tested an AWS Lambda function that is failing. A button then appears that we can select to get Amazon Q to help us with troubleshooting.

Amazon Q not only provides a summary of its initial analysis of the problem, but can also provide the steps required to resolve the issue.

Outside of the editor, Amazon Q can also help to troubleshoot network-related issues by working with Amazon VPC Reachability Analyzer. This allows you to ask questions in natural language as explained in this post introducing Amazon Q support for network troubleshooting.

Conclusion

The content produced by generative AI is non-deterministic. This means the exact code suggested may be slightly different each time, even for the same prompt. There are occasions when the suggested code may not compile or may contain an invalid configuration. This means there is still a level of developer expertise required to drive the tooling and understand the content generated. There is also training required to understand how best to write comments to get the best suggestions, in effect a variation on prompt engineering. However, without question, the capabilities available today will increase your productivity when developing on AWS.

For edge cases, there are other alternatives available which are worth mentioning. CodeWhisperer abstracts away all of the complexity of dealing with LLMs. Amazon Bedrock gives you API access to supported models such as Claude and Llama 2. There are also open source code LLMs like StarCoder that you can bring into Amazon SageMaker. This gives you more control over the dataset or instructions you use to fine-tune a base model, but brings with it higher cost and complexity.
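As a rough idea of what direct API access looks like, the sketch below calls a Claude model through the Bedrock runtime with boto3. It assumes boto3 is installed, AWS credentials are configured and access to the model has been granted in the account; the model ID `anthropic.claude-v2`, the region and the helper names are assumptions. The request body follows Claude's text-completion format, and other models expect different bodies.

```python
import json


def build_claude_request(prompt, max_tokens=300):
    """Build the JSON body for Claude's text-completion format on Bedrock."""
    return json.dumps({
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
    })


def ask_claude(prompt, model_id="anthropic.claude-v2", region="us-east-1"):
    """Send the prompt to Bedrock and return the completion text.
    Requires boto3, AWS credentials, and model access in the account."""
    import boto3  # imported lazily so build_claude_request works without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=model_id,
        body=build_claude_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())["completion"]
```

Compared with CodeWhisperer, you now own the prompt format, the model choice and the response parsing, which is the extra complexity referred to above.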

Hopefully this post has given you a taster of many of the capabilities now available that form the next generation developer experience on AWS, and will encourage you to try it out for yourself.